Zero False Positives in Deep Learning: An Achievable Goal—But One That Could Easily Backfire

Armen Ghambaryan

Lead Deep Learning Engineer

Zhora Gevorgyan

Lead Computer Vision Engineer

What is a false positive?

In deep learning, false positives occur when a model incorrectly identifies something as belonging to a certain class when it does not. While this may seem like a flaw, in some cases, false positives can actually be beneficial.

For example, in medical diagnoses, a false positive may mean that a disease is flagged when it is not actually present. While this might lead to unnecessary testing, it ensures that no potential cases are missed. The consequences of a false negative—failing to detect a real case—could be much more severe, especially for life-threatening conditions.

Similarly, in security systems like fraud detection, a false positive (flagging a legitimate transaction as fraudulent) is sometimes preferred to a false negative (letting a fraudulent transaction slip through). Catching potential threats early, even if it means some extra verification, minimizes risk and prevents costly damage.

In cases where the cost of missing an important signal is greater than that of a false alarm, false positives become a trade-off that we are willing to make. Therefore, while striving for accuracy, deep learning models in certain fields can afford, and may even benefit from, a higher rate of false positives.

At the same time, let us be clear—engineers can’t just grab any open-source model off GitHub, slap it together, and tell customers that false positives are a 'good thing.' That is reckless, and it is exactly how you destroy trust and credibility.

The reasons for false positives

Deep learning models produce false positives due to a variety of underlying factors. These range from issues in data quality and model architecture to challenges in real-world applications. Let’s dive deeper into the primary causes:

1. Imperfect training data: Models learn patterns from the data they are trained on. If the training data contains noise, mislabeled examples, or biases, the model may misinterpret certain patterns and produce false positives. For instance, if a medical dataset has many cases where benign conditions are mislabeled as malignant, the model might learn to over-predict diseases. Think you can just slap a green screen on and fake every possible scenario in-house? Think again. Any data scientist worth their salt knows that real-world data, with its messiness and diversity, is irreplaceable. Trying to create it all artificially is a shortcut to mediocrity.

2. Overfitting: When a model becomes too specialized in the training data, it may struggle to generalize to new, unseen examples. As a result, it may incorrectly identify patterns in data that don’t actually belong to the target class, causing false positives. The in-house green screen data from the previous point is a typical example.

3. Ambiguous or overlapping features: In some cases, classes in the dataset might share similar features. For example, a cat and a dog may both have fur, four legs, and similar shapes, leading the model to mistakenly label a dog as a cat. These overlapping features confuse the model and lead to incorrect classifications.

4. Threshold sensitivity: Deep learning models often make predictions based on confidence scores. Setting a low threshold for classification can increase the likelihood of identifying something as positive, even if it is not. This is often intentional, especially in fields like healthcare or security, where it is preferable to err on the side of caution.

5. Model complexity and capacity: Some models may be too simplistic for the task or lack the capacity to capture the complexity of real-world data, leading them to make incorrect predictions. Conversely, models with excessive complexity can detect spurious patterns that are not meaningful but still lead to false positives.

6. Data distribution shifts: When the real-world data the model encounters differs from the training data (e.g., changes in lighting, environment, or user behavior), the model may misinterpret new patterns, leading to false positives. The green screen example above applies here as well.

Imperfect training data

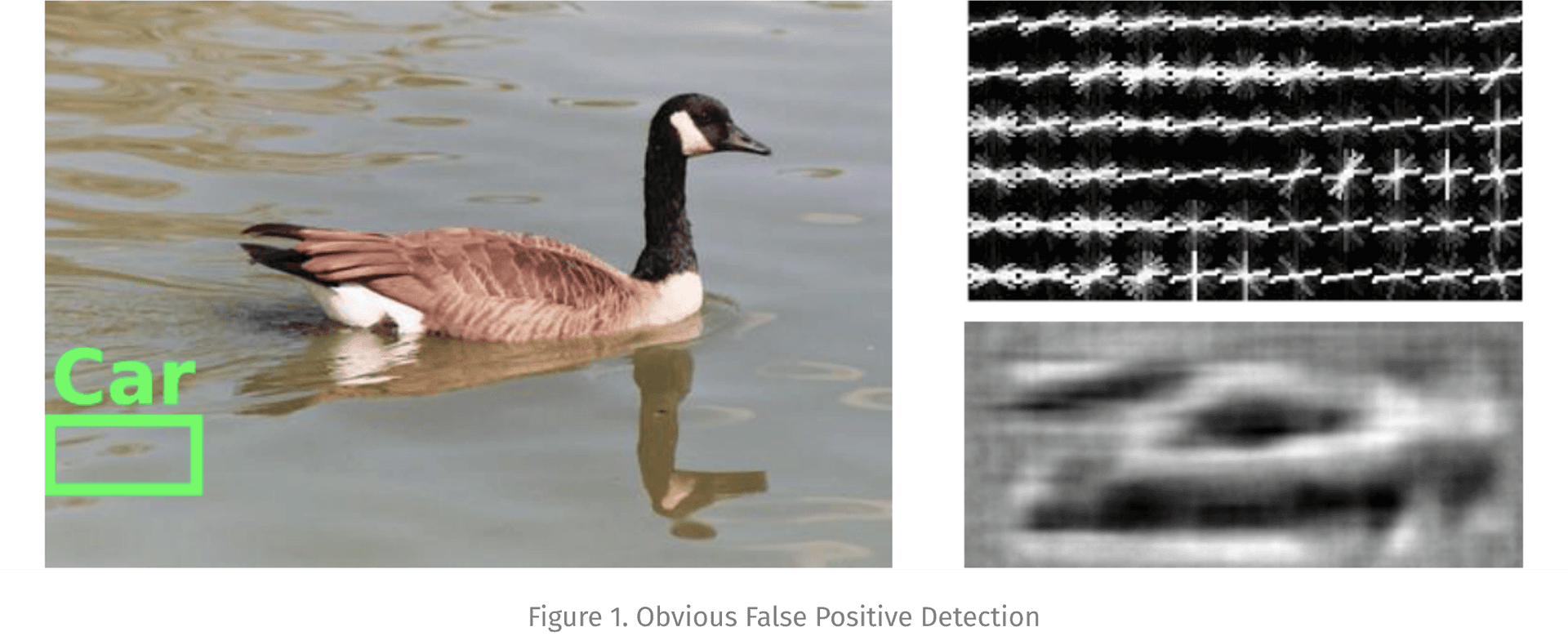

Training data for deep learning models can never be perfect for several reasons related to the nature of data collection, the complexity of real-world environments, and inherent limitations in how data can represent diverse scenarios. The process of gathering data is often subject to various biases, which can skew the dataset in ways that don’t fully represent the real world. For instance, if a facial recognition model is trained primarily on faces of one demographic, it might struggle to generalize to other demographics, leading to errors in real-world applications. The source of the data (e.g., geographic location, cultural context) will inevitably limit the range of examples the model is exposed to.

Another crucial point is the fact that the real world is vast and diverse, making it impossible to capture every potential scenario in a dataset. For instance, a self-driving car model trained on urban traffic data may perform poorly in rural environments where the roads, traffic signals, and objects encountered are different. No dataset can perfectly capture every variation of an object, event, or condition in the real world, leaving gaps in the model’s understanding.

These gaps can lead to instances where the model exhibits imperfect representational capacity, resulting in false positives that may seem obvious to the human eye. An example of this can be seen in traditional computer vision techniques, such as detecting a car using histograms of oriented gradients (Figure 1).

In the filtered example below, after applying model transformations to the input image, the final output resembles a car, even though no visual hint of a car is evident in the original image.
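To make the histogram-of-oriented-gradients idea more concrete, here is a minimal sketch of the descriptor for a single image cell. It is a simplified illustration, not the pipeline behind Figure 1: a full HOG detector also splits the image into many cells, normalizes over blocks, and feeds the result to a classifier. The function name `orientation_histogram`, the 9-bin layout, and the tiny test patches are assumptions made for this example.

```python
import math


def orientation_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees)."""
    height, width = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    # Border pixels are skipped because central differences need both neighbors.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal central difference
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# Hypothetical 6x6 patches: two different vertical edges and one horizontal edge.
vertical_edge = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
shifted_edge = [[0, 0, 1, 1, 1, 1] for _ in range(6)]
horizontal_edge = [[0] * 6 for _ in range(3)] + [[1] * 6 for _ in range(3)]

print(orientation_histogram(vertical_edge))
print(orientation_histogram(shifted_edge))
print(orientation_histogram(horizontal_edge))
```

The two vertical-edge patches are different images, yet they produce the same histogram, because the descriptor keeps only the direction and strength of edges inside the cell. That loss of information is one reason a gradient-based detector can respond to a scene that merely shares edge statistics with the target object.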

In many domains, some classes or events are much rarer than others (e.g., rarely occurring anomalies, rare types of fraud). These rare cases make up the "long tail" of the data distribution. It’s challenging to gather enough examples of these rare events for the model to learn from. As a result, the model may perform well on common cases but struggle with the rare cases that could be highly important, such as detecting rare security threats or rare complications in medical imaging.

It is also up to the data scientist to design the model effectively within limited resources by applying appropriate techniques for training production-grade models. By optimizing the model’s architecture, using techniques such as regularization, pruning, or data augmentation, and balancing computational costs, they can ensure that the model meets the necessary performance and efficiency requirements for deployment in real-world scenarios. Hence, relying solely on open-source datasets and models makes it challenging to achieve the desired performance or scalability. These datasets often lack the diversity or specificity required for production-grade models, and the open-source models may not be fully optimized for the unique requirements of a particular application.
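One common way to keep rare classes from being drowned out during training is to weight each class inversely to its frequency. The article does not prescribe this technique; it is shown here only as a minimal sketch of how the long-tail problem is often approached. The helper `inverse_frequency_weights` and the 95/5 label split are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical long-tailed dataset: 95 routine events and 5 rare ones.
labels = ["normal"] * 95 + ["fraud"] * 5
weights = inverse_frequency_weights(labels)
print(weights)
```

With these weights, each rare example contributes far more to the training loss than each common one, so the model is penalized more for ignoring the tail. Weighting does not create new information, however, so it complements rather than replaces collecting more real examples of the rare cases.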

No matter how much effort is put into collecting and curating training data, there will always be imperfections due to noise, bias, subjectivity, data drift, and the sheer complexity of real-world environments. Deep learning models must therefore be robust enough to handle these imperfections, and techniques like data augmentation, regularization, and continual learning are used to mitigate these challenges. However, perfect training data remains unattainable in practice.

Overfitting

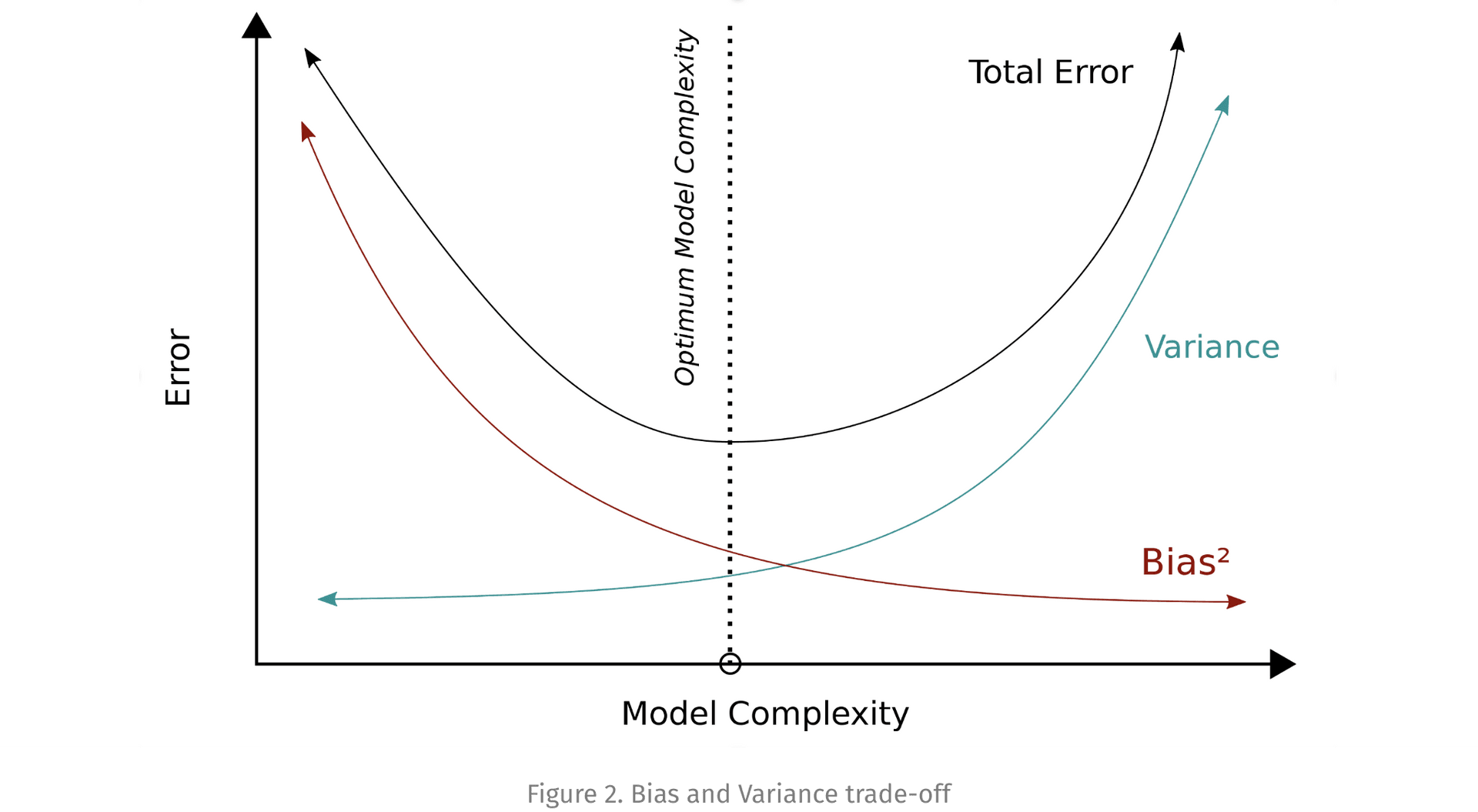

Every deep learning model is, to some extent, overfitted to the training data, including its noise, even with the best regularization techniques in place. The model doesn’t inherently know which parts of the data represent true patterns and which are noise—it simply tries to minimize the error on the training set. Even with extensive regularization, the model will always learn some noise or irrelevant details from the training data. There is always a trade-off between bias (underfitting) and variance (overfitting) (Figure 2). The trade-off refers to the balance between two sources of error in a machine learning model.

The total error for the model can be decomposed into the following parts:

● Bias: This is the error due to overly simplistic assumptions in the model. High bias leads to underfitting, where the model fails to capture the underlying patterns in the data.

● Variance: This is the error due to the model being too sensitive to small fluctuations in the training data. High variance leads to overfitting, where the model captures noise and irrelevant details, making it perform poorly on new data.

The trade-off lies in finding the right level of model complexity: if the model is too simple, it has high bias and low variance; if it is too complex, it has low bias but high variance. The goal is to minimize both, striking a balance that results in the lowest overall error.
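For squared-error loss, the decomposition described above has a standard closed form. The notation is an assumption introduced here, not taken from the article: the target is modeled as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat f$ is the trained model, and the expectation is taken over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat f(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The third term is the reason the total error cannot reach zero even with an ideal model: noise in the data sets a floor that no choice of complexity removes.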

As models increase in complexity, they are more likely to overfit the training data. Regularization helps control this, but some degree of overfitting is often inevitable because a model needs to find a balance between fitting the training data well and being general enough to work on unseen data.
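The effect of fitting noise can be illustrated with a small constructed experiment. The sketch below uses a k-nearest-neighbor classifier on a synthetic one-dimensional task, chosen only because it needs no external libraries; the data, the flipped-label pattern, and the function names are all assumptions made for illustration, not a deep learning setup.

```python
def knn_predict(train_x, train_y, x, k):
    """Majority vote among the k training points closest to x (k should be odd)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    votes = sum(train_y[i] for i in nearest)
    return 1 if 2 * votes > k else 0


def error_rate(train_x, train_y, xs, ys, k):
    """Fraction of points in (xs, ys) that the k-NN model gets wrong."""
    wrong = sum(knn_predict(train_x, train_y, x, k) != y for x, y in zip(xs, ys))
    return wrong / len(xs)


# Synthetic 1-D task: the true class is 1 when x >= 0.5.
n = 100
train_x = [i / n for i in range(n)]
# Every tenth training label is flipped to simulate annotation noise.
train_y = [int(x >= 0.5) ^ int(i % 10 == 3) for i, x in enumerate(train_x)]
# Unseen points with clean labels stand in for real-world data.
test_x = [(i + 0.25) / n for i in range(n)]
test_y = [int(x >= 0.5) for x in test_x]

for k in (1, 9):
    train_err = error_rate(train_x, train_y, train_x, train_y, k)
    test_err = error_rate(train_x, train_y, test_x, test_y, k)
    print(f"k={k}: training error={train_err:.2f}, test error={test_err:.2f}")
```

With k=1 the model memorizes every training label, including the flipped ones, so its training error is zero while each memorized mistake reappears on nearby unseen points. The smoother k=9 model no longer fits the training set perfectly but ignores most of the noise, which is the trade-off described above in miniature.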

Choosing the right architecture and training paradigm for a specific task is crucial. For example, it is clear that large language models (LLMs) are not always suited for vision tasks, highlighting the importance of selecting the appropriate model for each problem. LLMs process sequential text data, whereas computer vision tasks deal with spatial data (images or video). Image data has spatial relationships (e.g., pixels, edges) and requires models like Convolutional Neural Networks (CNNs) or Vision Transformers (ViTs), which are better suited for capturing these spatial dependencies. It ultimately depends on the expertise of the model designers to understand where and how to apply different architectures effectively.

Ambiguous or overlapping features

Ambiguous or overlapping features in training data can cause deep learning models to struggle with accurate predictions, especially when dealing with edge cases where distinguishing between classes is particularly challenging. Here are some examples from image classification, object detection, behavior recognition and facial recognition fields:

Image Classification: Cats vs. Dogs

● Ambiguous Features: Both cats and dogs may have similar facial structures (eyes, noses) or body shapes. In certain breeds, fur color and texture might also overlap.

● Edge Cases:

● Small, low-resolution images where it’s difficult to see distinguishing details.

● Dogs with short fur or cats with unusual fur patterns that resemble those of the opposite species.

● Images where the animal is partially obstructed or curled up, making it hard to discern its species.

Object Detection: Cars vs. Trucks

● Ambiguous Features: Cars and trucks often share similar features, like wheels, doors, and windows.

● Edge Cases:

● Small trucks that look like large cars or vice versa.

● Partial images where only the wheels or a small part of the vehicle is visible, making it hard to tell the difference.

● Vehicles viewed from odd angles, where distinguishing features are less visible.

Behavior Recognition: Detecting Physical Altercations vs. Harmless Interactions

● Ambiguous Features: Physical interactions, like sports activities or playful behavior, can often resemble violent altercations in certain situations, making it difficult for models to differentiate between the two.

● Edge Cases:

● Playful Roughhousing: Two individuals playfully pushing each other or engaging in friendly physical contact might be misinterpreted as a fight, as the movement dynamics (fast motions, physical contact) are similar to those of an actual altercation.

● Crowded Areas: In a dense crowd (e.g., at concerts or sporting events), accidental bumps, pushes, or movements could resemble aggressive interactions. Models might have difficulty differentiating accidental jostling from actual fighting.

● Obstructed or Partial View: If part of the scene is blocked by objects or the participants are partially off-screen, the model may not have enough visual information to tell whether aggressive behavior is occurring, leading to misclassification of innocent behavior as fighting.

● Body Language Ambiguity: Raised arms, fast hand movements, or sudden, exaggerated gestures (such as someone waving or jumping) might be interpreted as signs of aggression, even if no actual fight is taking place.

● Verbal Altercations without Physical Contact: A heated verbal argument where individuals are shouting but not physically touching might be hard to distinguish from a physical fight in video footage. Conversely, the lack of physical contact could lead the model to misidentify a genuinely tense confrontation as a non-threatening interaction.

● Sudden Movements in a Non-Fight Context: Someone running quickly toward another person (e.g., to hug or greet them) may be misinterpreted as aggressive behavior, especially if the approach is fast or sudden, mimicking motions associated with a fight.

Face Recognition: Twins or Siblings

● Ambiguous Features: Twins or close siblings can share many facial features, making it hard for models to differentiate between them.

● Edge Cases:

● Identical twins with only minor differences, like hairstyle or small facial marks.

● Family members who have similar facial structures but different age or gender.

● Faces in shadows or low-resolution images where key distinguishing features are harder to identify.

Threshold sensitivity

By adjusting the model thresholds, we can fine-tune the balance between false positives and false negatives. Reducing false predictions to almost zero by tweaking the threshold has both positive and negative consequences.
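The effect of moving the threshold can be seen by counting errors at several cut-offs. The following is a minimal sketch with made-up confidence scores and labels; `confusion_counts` is a hypothetical helper, and a real evaluation would use a held-out validation set rather than ten hand-picked values.

```python
def confusion_counts(scores, labels, threshold):
    """Count TP, FP, FN, TN when scores >= threshold are called positive."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label:
            tp += 1
        elif predicted and not label:
            fp += 1
        elif not predicted and label:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


# Hypothetical confidence scores and ground-truth labels (1 = positive).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.25, 0.50, 0.75, 0.92):
    tp, fp, fn, tn = confusion_counts(scores, labels, threshold)
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    print(f"threshold={threshold:.2f}  FP={fp}  FN={fn}  "
          f"precision={precision:.2f}  recall={recall:.2f}")
```

On this toy data the lowest threshold produces three false positives and no false negatives, while the highest produces no false positives but misses four of the five real positives. Zero false positives is reachable, but only by giving up most of the detections.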

Positive consequences of reducing false positives or false negatives to near-zero:

● Increased Precision:

● If the threshold is raised (i.e., requiring higher confidence to classify something as positive), the model becomes more selective, significantly reducing false positives.

● This is often, though not always, beneficial in fraud detection, where minimizing false accusations of fraud is critical to avoid unnecessary complications for legitimate users.

● Higher Confidence in Decisions:

● In critical fields like healthcare (e.g., cancer diagnosis), reducing false positives to near-zero ensures that when the model predicts a positive case, the medical team can be very confident in the diagnosis, minimizing unnecessary treatments and patient anxiety.

● Improved User Trust:

● In user-facing applications like spam detection or content filtering, reducing false positives to near-zero ensures that legitimate emails or posts are not incorrectly blocked. This helps maintain trust in the system's reliability.

Negative consequences of reducing false positives or false negatives to near-zero:

● Increased False Negatives:

● By raising the threshold to avoid false positives, the model might miss true positive cases, resulting in more false negatives. For instance, in fraud detection, while fewer legitimate transactions are flagged, actual fraud cases may go undetected, potentially causing financial loss.

● Risk of Under-Detection:

● In security systems (e.g., intrusion detection), increasing the threshold to reduce false positives may lead to missed detections of actual threats, compromising safety.

● Imbalanced Model Performance:

● Reducing one type of error (false positives or false negatives) to near-zero can create an imbalance, making the model overly conservative. For instance, in medical screening, a model that is highly selective may miss early-stage diseases, leading to delayed diagnoses.

● Over-Cautious Predictions:

● If the threshold is set too high to minimize false positives, the model may become overly cautious, refraining from making positive predictions. This could result in fewer overall positive classifications, limiting the model's utility in applications like anomaly detection or early warnings.
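Which side of this trade-off to favor depends on what each kind of error costs. One way to make the choice explicit is to assign a cost to a false positive and to a false negative and pick the threshold with the lowest total. The sketch below assumes hypothetical scores, labels, candidate thresholds, and cost values; none of them come from a real system.

```python
def total_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Sum the cost of every false positive and false negative at a threshold."""
    cost = 0.0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and not label:
            cost += cost_fp
        elif not predicted and label:
            cost += cost_fn
    return cost


def best_threshold(scores, labels, candidates, cost_fp, cost_fn):
    """Return the candidate with the lowest total cost (the first one on ties)."""
    return min(candidates,
               key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))


# Hypothetical validation scores and labels (1 = positive).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
candidates = [0.25, 0.50, 0.75, 0.92]

# Screening-style setting: a missed case is assumed ten times worse than a false alarm.
print(best_threshold(scores, labels, candidates, cost_fp=1, cost_fn=10))
# Filtering-style setting: a wrongly blocked item is assumed ten times worse than a miss.
print(best_threshold(scores, labels, candidates, cost_fp=10, cost_fn=1))
```

The same model and the same scores lead to opposite threshold choices under the two cost assumptions, which is why a single "correct" false positive rate does not exist independently of the application.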

Model complexity and capacity

Depending on the dataset size, more complex models can learn more complex patterns. In practice, however, deploying high-capacity models is constrained by the significant computational power, memory, and time they need to make predictions, which can be impractical for real-time applications such as autonomous driving, mobile devices, or edge computing.

Efficient deployment often requires simplifying models while maintaining reasonable performance to meet resource constraints and time-critical demands.

Data distribution shifts

Distribution shifts are closely tied to the size and diversity of the training data. As mentioned earlier, no dataset can fully encapsulate the true nature of the event of interest. This means there will always be adverse environmental changes that can degrade a model’s performance over time. Fortunately, continuously updating the models can help mitigate this issue. However, this requires establishing a consistent feedback loop to identify and address these shifts early, preventing potential downstream issues and user frustration.
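A feedback loop of this kind needs some signal that the incoming data has drifted away from what the model was trained on. One simple option, used here purely as an illustration and not described in the article, is the Population Stability Index (PSI) between a reference sample and a live sample of some monitored feature. The feature (mean image brightness), the sample values, the bin edges, and the alert threshold are all hypothetical.

```python
import math


def bin_fractions(values, edges):
    """Fraction of values per bin [edges[i], edges[i+1]); values outside are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def population_stability_index(reference, live, edges, eps=1e-6):
    """PSI = sum((live - ref) * ln(live / ref)) over bins; 0 means identical histograms."""
    psi = 0.0
    for r, c in zip(bin_fractions(reference, edges), bin_fractions(live, edges)):
        r, c = max(r, eps), max(c, eps)  # avoid log(0) for empty bins
        psi += (c - r) * math.log(c / r)
    return psi


# Hypothetical mean-brightness values: daytime training footage vs. live night footage.
reference = [0.62, 0.70, 0.66, 0.74, 0.58, 0.69, 0.71, 0.65]
live = [0.21, 0.30, 0.18, 0.25, 0.66, 0.22, 0.28, 0.19]
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
ALERT_THRESHOLD = 0.25  # assumed value; tune per deployment

psi = population_stability_index(reference, live, edges)
print(f"PSI={psi:.2f}, drift alert={psi > ALERT_THRESHOLD}")
```

A score like this does not say whether predictions are wrong, only that the inputs no longer look like the training data. In practice it would be paired with periodic human review of flagged events so that retraining is triggered by evidence rather than by user complaints.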

When dealing with a system that produces many false positives, it's important not to simply accept this as a norm. Excessive false positives often indicate that the vendor may not have optimized the model properly. High rates of false positives can lead to inefficiency, increased manual work, and user frustration. This could be a result of poor data quality, inadequate model tuning, or a lack of robust testing.

It is essential to demand better performance from vendors by ensuring they’ve used the right techniques, such as:

● Improving the data used for training

● Applying appropriate regularization

● Fine-tuning model thresholds

● Ensuring proper validation and testing processes

Settling for a high number of false positives means accepting a poorly performing model, which can be avoided with the right adjustments and optimizations.

Back to the practical implications of false positives

False positives from deep learning models in video analysis are often less frustrating compared to the alternative of manually reviewing 24/7 footage, for several key reasons:

1. Human Limitations: It's impossible for a human officer to monitor even a single video stream continuously without losing focus. Research shows that human attention decreases significantly after 20 minutes of surveillance monitoring, which increases the chances of missing important events. In contrast, an AI system can monitor multiple streams simultaneously without fatigue (briefcam) (SpringerOpen).

2. Efficiency over Manual Labor: Even though false positives might lead to some unnecessary checks, they are far more efficient than missing critical events. AI-powered surveillance systems can quickly sift through vast amounts of video footage, flagging moments of interest for human review. This saves countless hours of labor compared to manually watching footage in real time.

3. Scalability: AI models can monitor multiple camera feeds 24/7, covering far more ground than a human ever could. In large facilities or cities, this scalability ensures comprehensive coverage without the need to hire large teams of officers. A few false positives become a minor inconvenience compared to the sheer scale of work the AI handles.

4. Prevention of Critical Errors: False positives are preferable to missing actual events (false negatives), especially in high-stakes situations such as security or healthcare. Missing an important event due to a lack of attention can have severe consequences, which justifies a few extra checks prompted by false positives.

In summary, while false positives are not ideal, they are far less frustrating than relying on human officers to constantly monitor footage—a task that’s impractical given human attention limits and the overwhelming volume of data to process. With the continuous increase in data and advancements in AI, models are becoming smarter every day, gradually overcoming many performance issues discussed earlier. As a result, AI-driven systems are becoming increasingly appealing from a cost-benefit perspective for large-scale surveillance of events, offering greater efficiency and scalability compared to traditional methods.
