A DeepSeek Moment in Computer Vision

Zhora Gevorgyan

Lead Computer Vision Engineer & Co-Founder, Scylla Technologies Inc.

Scylla Technologies Inc. is proud to announce a major milestone: our flagship AI model, ScyllaNet, has secured 2nd place on the globally respected COCO (Common Objects in Context) test-dev leaderboard with its submission on September 24, 2025. Achieving a mean Average Precision (mAP) of 0.66, ScyllaNet now stands shoulder-to-shoulder with the world’s top contenders.

This accomplishment marks a defining moment for lightweight AI in security—echoing the disruptive impact of DeepSeek—by proving that compact, efficient models can match or exceed heavyweight systems. It reinforces Scylla’s leadership in real-time object detection and AI-powered security, paving the way for next-generation computer vision deployments across critical infrastructure worldwide.

Precision and Efficiency Redefined

Benchmarked on COCO—the gold standard for object detection—ScyllaNet delivers strong performance across all object scales:

● Large objects: 0.79 mAP, 0.92 AR
● Medium objects: 0.69 mAP
● Small objects: 0.50 mAP, 0.81 AR (max=100)
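The small, medium, and large split follows COCO's standard area ranges: under 32² pixels, between 32² and 96², and above 96². Below is a minimal sketch of that bucketing. It assumes the area is given in square pixels, as in the annotation's `area` field. The function name `coco_scale` is hypothetical and not part of any COCO tooling.

```python
# COCO size buckets by object area in square pixels (the annotation's "area" field).
SMALL_MAX = 32 ** 2   # 1024
MEDIUM_MAX = 96 ** 2  # 9216


def coco_scale(area: float) -> str:
    """Return the COCO size bucket ("small", "medium", "large") for an object area.

    Simplification: pycocotools treats range bounds as inclusive, so an object
    whose area is exactly 32**2 or 96**2 is counted in both adjacent buckets there.
    """
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


if __name__ == "__main__":
    for w, h in [(20, 20), (50, 50), (120, 120)]:
        print(w, h, coco_scale(w * h))  # small, medium, large
```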

These results translate directly to real-world impact: ScyllaNet accurately detects intruders, weapons, and abnormal behaviors even in crowded and complex scenes.

Despite its accuracy, ScyllaNet contains just ~40 million parameters and runs 90–200× faster than models such as Co-DETR ViT-L (300M–3B parameters). This efficiency shows that lightweight architectures can deliver both speed and accuracy, enabling broader deployment for security operations at scale.

At the core of this advancement is Scylla’s proprietary SIoU (Scylla-IoU) loss function. It improves bounding box regression by accounting for the distance, angle, and shape mismatch between predicted and ground-truth boxes, in addition to their overlap. This accelerates convergence during training and boosts accuracy, helping ScyllaNet outperform models like YOLOv8 in both speed and precision.
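The sketch below shows how the cost terms combine, following the published SIoU formulation: an IoU term plus angle, distance, and shape costs. It is a framework-free illustration for a single pair of boxes, not Scylla's production code. The box format (x1, y1, x2, y2), the shape exponent `theta = 4`, and the `eps` guards are assumptions. A training implementation would be vectorized in a tensor library.

```python
import math


def siou_loss(pred, target, theta=4.0, eps=1e-9):
    """SIoU-style loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = target
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # IoU term: overlap between prediction and ground truth.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)

    # Offset between box centers and its length sigma.
    dx = (gx1 + gx2) / 2 - (px1 + px2) / 2
    dy = (gy1 + gy2) / 2 - (py1 + py2) / 2
    sigma = math.hypot(dx, dy)

    # Angle cost: Lambda = 1 - 2 * sin^2(arcsin(x) - pi/4), with x = |dy| / sigma.
    if sigma > eps:
        x = min(1.0, abs(dy) / sigma)
        angle_cost = 1 - 2 * math.sin(math.asin(x) - math.pi / 4) ** 2
    else:
        angle_cost = 0.0  # centers coincide; the distance cost is 0 regardless

    # Distance cost, modulated by the angle cost through gamma = 2 - Lambda.
    gamma = 2 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative width/height mismatch, sharpened by theta.
    omega_w = abs(pw - gw) / max(pw, gw, eps)
    omega_h = abs(ph - gh) / max(ph, gh, eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance_cost + shape_cost) / 2


if __name__ == "__main__":
    print(siou_loss((0, 0, 2, 2), (1, 0, 3, 2)))  # partial overlap: between 0 and 1
    print(siou_loss((0, 0, 1, 1), (2, 0, 3, 1)))  # no overlap: above 1
```

Unlike a plain `1 - IoU` loss, this loss still separates non-overlapping predictions by how far they are from the target. That gives the regressor a useful signal early in training.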

A Turning Point for AI in Security

Earning 2nd place on the COCO leaderboard is more than a technical success. It is proof that lightweight, efficient AI can lead in AI video surveillance, a market expected to reach $28.8 billion by 2030. ScyllaNet challenges conventional thinking about edge AI by demonstrating that real-time monitoring at scale is achievable for military bases, smart cities, and critical infrastructure.

This milestone positions Scylla Technologies at the forefront of global security innovation.

Benchmark and Evaluation Protocol

We evaluated ScyllaNet on the COCO test-dev 2025 benchmark using the official CodaLab evaluation server. All results are for bounding-box detection and follow the COCO evaluation protocol. mAP is averaged over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. Average Recall (AR) is reported across object scales (small, medium, large) and detection limits (1, 10, and 100 detections per image). The model was trained without external data and evaluated with standard test-time augmentation (TTA).
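Averaging over IoU thresholds can be illustrated with a short sketch. It assumes boxes in (x1, y1, x2, y2) form and takes per-threshold AP values as given. The official pycocotools implementation also matches detections to ground truth greedily by score and interpolates precision at 101 recall points; this sketch omits both steps. The helper names are hypothetical.

```python
from statistics import mean

# COCO's ten IoU thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def box_iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def thresholds_passed(iou):
    """IoU thresholds at which a matched detection counts as a true positive."""
    return [t for t in IOU_THRESHOLDS if iou >= t]


def coco_map(ap_per_threshold):
    """AP@[.50:.95]: the plain mean of the AP computed at each of the 10 thresholds."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return mean(ap_per_threshold)
```

For example, a detection that overlaps its ground-truth box with IoU 0.72 counts as a true positive at only 5 of the 10 thresholds (0.50 through 0.70). This is why AP@[.50:.95] rewards tight localization much more than AP@.50 does.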

Overall Leaderboard Standing


| Rank | Team / Model | AP@[.50:.95] | Parameters / Notes |
|---|---|---|---|
| 1 | CW_Detection | 0.66 | Top-ranked overall AP; fewer metric leads than ScyllaNet |
| 2 | Scylla Technologies Inc. (ScyllaNet) | 0.6612 | ~40M params; leads 6 of 12 metrics; best average rank across all metrics |
| 3 | zongzhuofan (SenseTime Basemodel) | 0.66 | ~300M params; Co-DETR-style transformer |
| 4 | UBTECH Thinker | 0.66 | Unknown architecture |
| 5 | InternImage-DCNv3 (PJLab & Tsinghua) | 0.655 | ~1B params; InternImage-H variant; best AP_small and AR_large |

Detailed Metric Comparison

ScyllaNet ranks first in AP_medium, AR@10, AR@100, AR_medium, AR_small, and AR@1 (tied), and second in AR_large and AP_large.

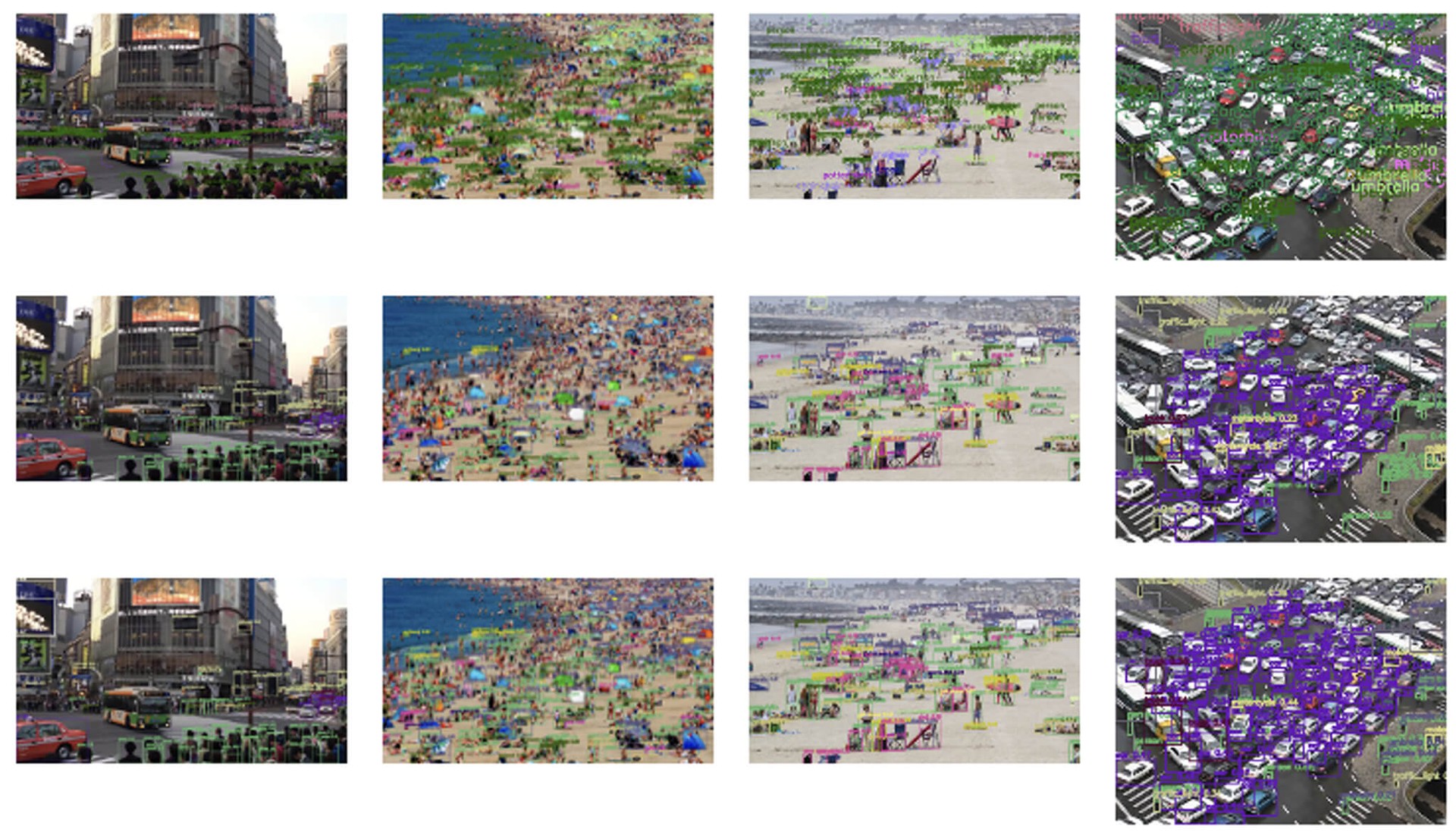

Comparison of COCO test-dev performance: the first row shows ScyllaNet, the second row shows InternImage-H, and the fourth row shows Co-DETR.
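The "best average rank" claim implies averaging each model's position across the 12 standard COCO metrics. The article does not state the exact procedure. The sketch below assumes a plain mean of per-metric ranks in which tied models share the better rank. `mean_ranks` and `metric_leads` are hypothetical helpers, and any scores passed to them are placeholders rather than leaderboard values.

```python
from statistics import mean

# The 12 standard COCO bounding-box metrics.
COCO_METRICS = [
    "AP", "AP50", "AP75", "AP_small", "AP_medium", "AP_large",
    "AR@1", "AR@10", "AR@100", "AR_small", "AR_medium", "AR_large",
]


def mean_ranks(scores):
    """Mean per-metric rank for each model (1 = best; higher scores are better).

    `scores` maps model name -> {metric name -> value}. Ties share the better
    rank (competition ranking), so models tied for first on a metric both get 1.
    """
    per_model = {model: [] for model in scores}
    for metric in COCO_METRICS:
        values = [s[metric] for s in scores.values()]
        for model, s in scores.items():
            # Rank = 1 + number of models strictly better on this metric.
            better = sum(1 for v in values if v > s[metric])
            per_model[model].append(1 + better)
    return {model: mean(ranks) for model, ranks in per_model.items()}


def metric_leads(scores, model):
    """Count the metrics on which `model` ranks first (ties included)."""
    return sum(
        1
        for metric in COCO_METRICS
        if scores[model][metric] == max(s[metric] for s in scores.values())
    )
```

Under this scheme, a model can have the best mean rank without the top overall AP. It only needs to place consistently high across the other eleven metrics.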

Comparative Insights

Compared to CW_Detection:

● Nearly identical overall AP@[.50:.95] (difference < 0.01)
● Slightly lower AP@.50 (–0.01), reflecting a stricter precision regime
● Higher recall across most metrics (+0.01–0.02 on average)

Compared to InternImage and Co-DETR, ScyllaNet achieves similar or better accuracy with 6–8× higher parameter efficiency, thanks to adaptive receptive fields and attention-based feature aggregation—not brute-force scaling.


Conclusion

ScyllaNet’s submission ranks 2nd overall with mAP@[.50:.95] = 66.12% and achieves the best (lowest) mean rank across all 12 COCO metrics while using only ~40M parameters. These results underscore ScyllaNet’s architectural efficiency and balanced precision-recall trade-off, establishing it as a new benchmark for lightweight, high-performance object detection.
