How to ensure accuracy and sensitivity of the face recognition system

Armen Ghambaryan, Ph.D.

Lead Deep Learning Engineer

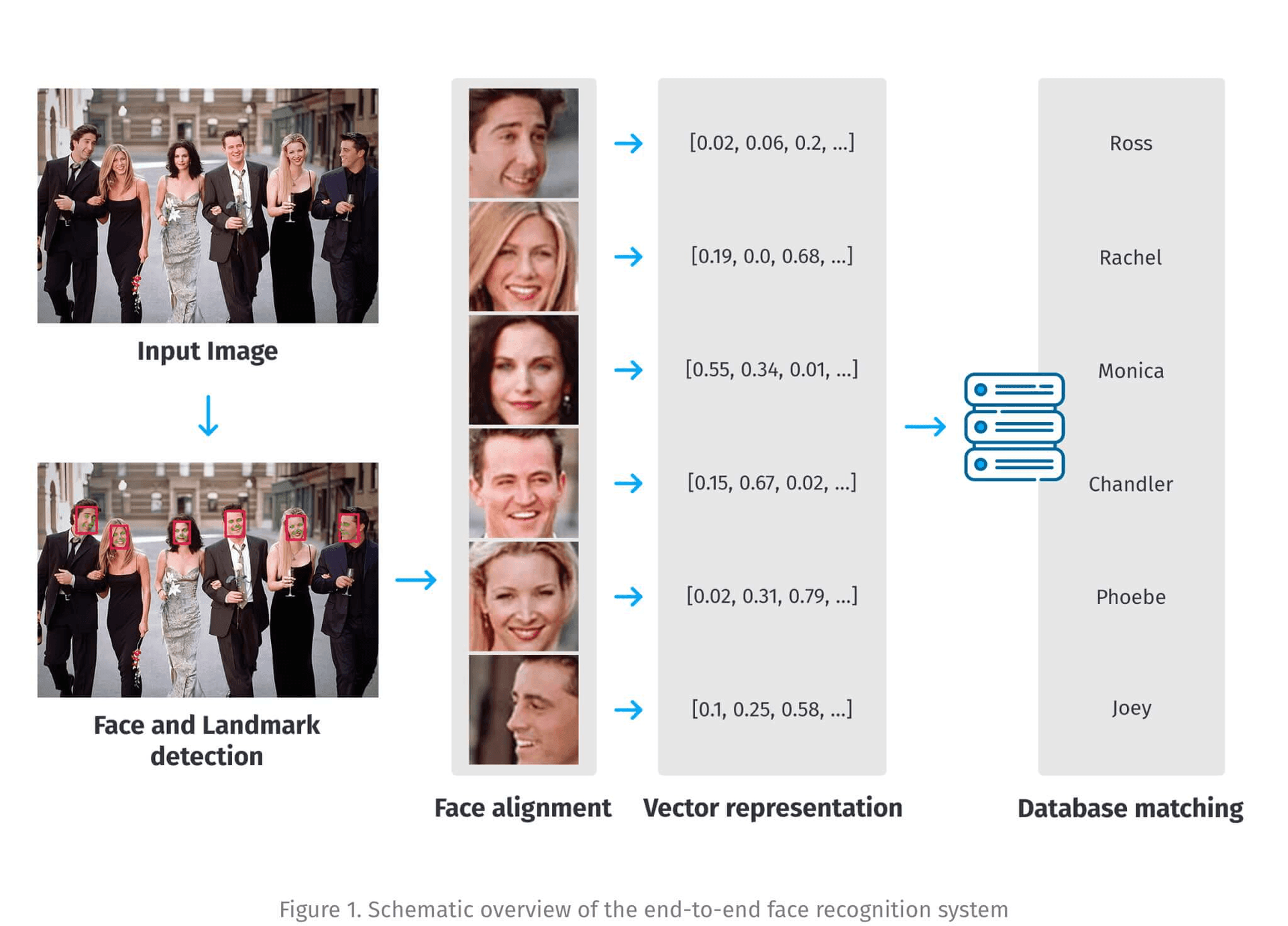

Modern deep learning-based 2D face recognition systems consist of three stages: face detection, face alignment, and face representation. First, the face or faces in the image are located; they are then converted to a canonical form through alignment; finally, a vector representation of each face is generated for recognition (Figure 1). The accuracy and sensitivity of the system depend heavily on each of these stages.

The first stage of an end-to-end face recognition system in an uncontrolled environment is face detection. The quality of the predicted face bounding boxes has a significant impact on the overall accuracy of the system. Oversized bounding boxes introduce background noise, while overly tight ones lose information; both degrade the subsequent stages. The major challenges for face detection are varying resolutions, poses, illumination, and occlusion, and, last but not least, the accuracy-efficiency trade-off. Although tremendous strides have been made in uncontrolled face detection, efficient detection at a computation cost low enough to scale the face recognition system, while preserving high accuracy and sensitivity, remains an open challenge [1].
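One common way to quantify how far a predicted box is from a reference box is intersection over union (IoU). The sketch below is illustrative only: the article does not prescribe a metric, and the box coordinates are made-up values in `(x1, y1, x2, y2)` format.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical boxes: a reference face box, an oversized one, a tight one.
reference = (100, 100, 200, 200)
oversized = (80, 80, 220, 220)
tight = (120, 120, 180, 180)

print(round(iou(reference, oversized), 2))  # 0.51
print(round(iou(reference, tight), 2))      # 0.36
```

Both the oversized and the tight box score well below 1.0, although they fail in opposite ways: the first adds background, the second cuts away part of the face.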

Face alignment uses spatial transformation techniques to bring the detected faces into a normalized layout. The transformation is usually based on facial keypoints obtained either directly from the face detection stage or from a separate model. There are also landmark-free alignment methods, which use deep convolutional neural networks to produce the transformation directly. The keypoint-based approach is more popular because of its simplicity and flexibility. In addition, the transformation produced by landmark-free alignment is not fixed from image to image, which is impractical in real-world applications.
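As a minimal sketch of keypoint-based alignment, the code below estimates a least-squares similarity transform (scale, rotation, translation) that maps detected keypoints onto a canonical template, using the standard Umeyama closed-form solution. The five-point `TEMPLATE` and the `detected` keypoints are hypothetical values chosen for illustration; they are not taken from the article or from any particular model.

```python
import numpy as np

# Hypothetical canonical positions (pixels) for: left eye, right eye,
# nose tip, left mouth corner, right mouth corner.
TEMPLATE = np.array(
    [[38.0, 52.0], [74.0, 52.0], [56.0, 72.0], [42.0, 92.0], [70.0, 92.0]]
)


def estimate_similarity(src, dst):
    """Least-squares similarity transform mapping src onto dst.

    src, dst: (N, 2) arrays of corresponding points.
    Returns a 2x3 matrix M with dst ~= src @ M[:, :2].T + M[:, 2].
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - src_mean, dst - dst_mean
    # Cross-covariance between the centered point sets.
    cov = dst_c.T @ src_c / len(src)
    u, s, vt = np.linalg.svd(cov)
    # Flip the last axis if needed so the result is a rotation,
    # not a reflection.
    d = np.ones(2)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        d[-1] = -1.0
    rot = u @ np.diag(d) @ vt
    scale = (s * d).sum() / src_c.var(axis=0).sum()
    trans = dst_mean - scale * rot @ src_mean
    return np.hstack([scale * rot, trans[:, None]])


# Hypothetical keypoints detected on a larger, slightly rotated face.
detected = np.array(
    [[210.0, 180.0], [290.0, 195.0], [245.0, 235.0],
     [205.0, 270.0], [270.0, 282.0]]
)
M = estimate_similarity(detected, TEMPLATE)
aligned = detected @ M[:, :2].T + M[:, 2]
print(np.abs(aligned - TEMPLATE).max())  # residual after alignment, in pixels
```

The resulting 2x3 matrix has the usual affine-warp layout, so it could be handed to an image warping routine to produce the aligned face crop.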

The most crucial stage for accurate face recognition is embedding generation, on which the similarity score is based. This article concentrates mainly on this stage, since this is where major accuracy problems can arise from dataset imbalances or sub-optimal training routines. To minimize these problems, one first needs to understand how face representation models are trained. The key differences lie in the structure of the loss function used. There are two basic methodologies, each with its own modified versions: classification-based and embedding-based.
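To make the similarity score concrete, here is a minimal sketch that assumes cosine similarity between embeddings, a common choice that the article does not itself specify. The function names and the `threshold` value are hypothetical; in practice the threshold would be tuned on validation data.

```python
import numpy as np


def cosine_similarity(emb_a, emb_b):
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    a = np.asarray(emb_a, dtype=np.float64)
    b = np.asarray(emb_b, dtype=np.float64)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def is_same_person(emb_a, emb_b, threshold=0.5):
    """Verification decision; threshold=0.5 is an illustrative value."""
    return cosine_similarity(emb_a, emb_b) >= threshold


# Toy 4-dimensional embeddings (real models typically use hundreds of dims).
probe = [0.9, 0.1, 0.3, 0.2]
gallery_match = [0.8, 0.2, 0.4, 0.1]
gallery_other = [-0.2, 0.9, -0.3, 0.1]

print(is_same_person(probe, gallery_match))  # True
print(is_same_person(probe, gallery_other))  # False
```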

In the first case, face representation learning is treated as a classification problem with a corresponding softmax-based loss function. The feature embedding-based approach learns the face representation by optimizing the distance between sample pairs. If a pair belongs to the same person, the distance between the embeddings is minimized, and hence the similarity score is maximized. For negative sample pairs, the opposite is done during training: the distance is maximized. The embedding-based approach with triplet loss lay at the core of training the FaceNet model [2]. Even though triplet loss makes perfect sense for face recognition, the sample-to-sample comparisons are local to a mini-batch, and training is very challenging because the number of triplets explodes combinatorially, especially for large-scale datasets. This calls for effective sampling strategies to select informative mini-batches [2,3] and to choose representative triplets within each mini-batch [4,5,6].
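The triplet objective can be sketched in a few lines. This is a simplified NumPy version using squared Euclidean distances and a fixed margin; the `margin` value and the toy embeddings are illustrative assumptions, and the triplet mining that makes training practical is left out entirely.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean hinge loss over a batch of (anchor, positive, negative) triplets.

    Each argument is a (B, D) array of embeddings. The loss is zero for a
    triplet once the negative is farther from the anchor than the positive
    by at least `margin` (in squared Euclidean distance).
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return float(np.maximum(d_pos - d_neg + margin, 0.0).mean())


anchor = np.array([[0.0, 0.0]])
same_person = np.array([[0.0, 1.0]])   # squared distance 1 from the anchor
other_person = np.array([[3.0, 0.0]])  # squared distance 9 from the anchor

# Well-separated triplet: no loss, hence no gradient signal.
print(triplet_loss(anchor, same_person, other_person))  # 0.0
# Violating triplet (roles swapped): positive loss that training would reduce.
print(triplet_loss(anchor, other_person, same_person))  # 8.2
```

The first call shows why sampling matters: triplets that already satisfy the margin contribute nothing, so most randomly chosen triplets are uninformative.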

Compared with classification-based supervision, feature embedding does away with the parameters of the fully connected softmax layer, which reduces the hardware requirements for training the model. This is especially important when the model is trained on a large dataset with millions of identities. On the other hand, the batch size of training samples limits the performance of feature embedding [7]. Several hard-sampling methods enrich the effective information in each batch, which is crucial for improving the accuracy of the feature embedding-based approach. However, sampling and proxy methods for sample-to-proxy comparison optimize the embeddings of only a subset of the classes, rather than all classes, in each iteration step [8].
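A rough back-of-the-envelope calculation shows why the fully connected layer matters at scale. The numbers below are assumptions for illustration (one million identities, 512-dimensional embeddings, 32-bit weights); the estimate covers the weight matrix only and ignores gradients and optimizer state, which add to it during training.

```python
def classifier_head_size(num_identities, embedding_dim, bytes_per_param=4):
    """Parameter count and size in GiB of a bias-free softmax weight matrix."""
    params = num_identities * embedding_dim
    return params, params * bytes_per_param / 1024**3


params, gib = classifier_head_size(num_identities=1_000_000, embedding_dim=512)
print(f"{params:,} parameters, {gib:.2f} GiB")  # 512,000,000 parameters, 1.91 GiB
```

An embedding-based loss needs none of these parameters, which is the saving the paragraph above refers to.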

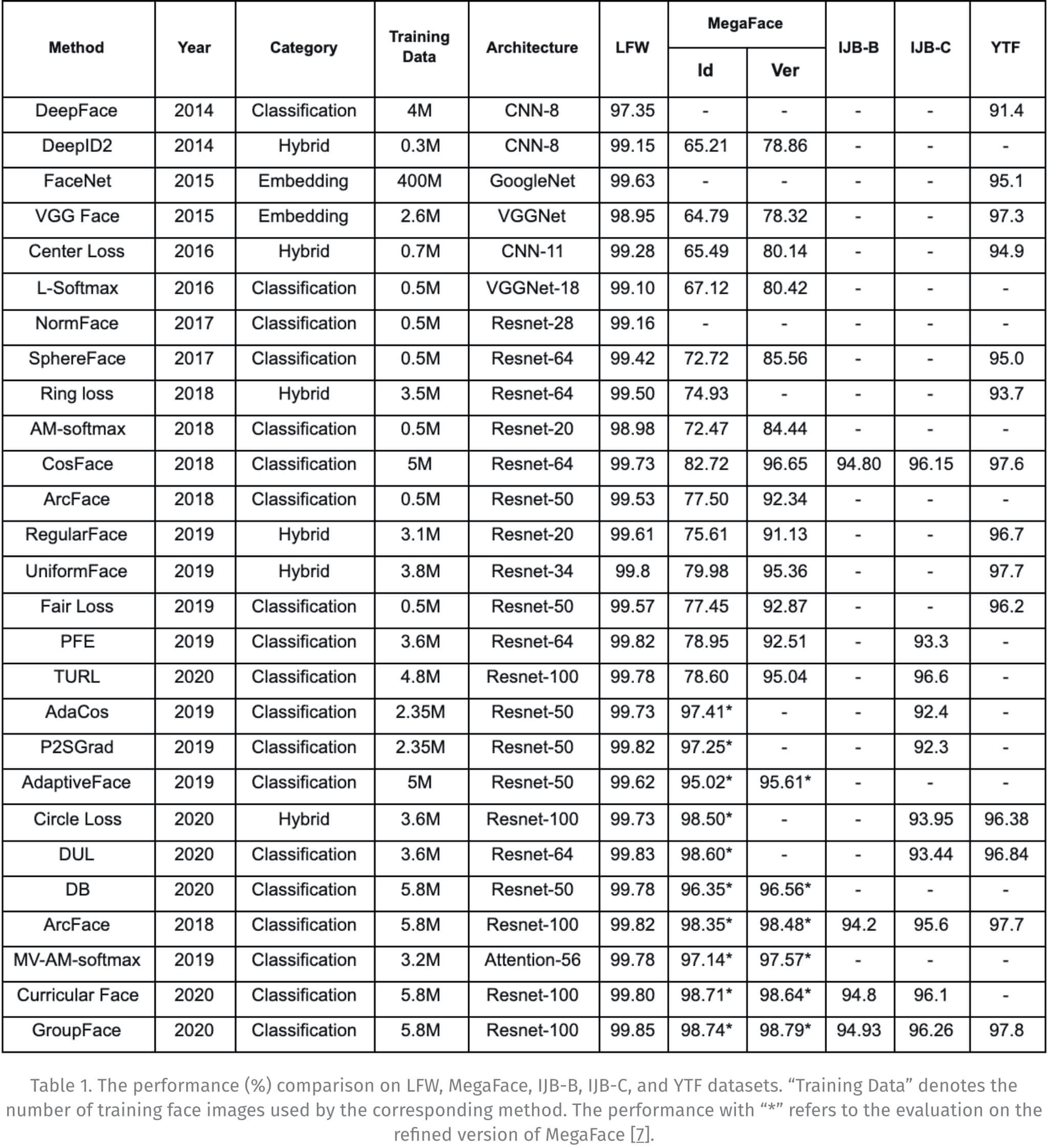

The classification method is generally considered the more suitable approach for face representation learning, since it has dominated the state-of-the-art results in face recognition benchmarks (Table 1). Compared with embedding-based methods, the softmax-based approach conducts global comparisons at the cost of the memory needed to hold information on each class identity. Sample-to-class comparison is more stable than sample-to-sample comparison, since the number of classes is much smaller than the number of potential sample pairs.

One way to further boost system performance is to use hybrid methods that combine the best of both worlds while also taking into account the class imbalances that exist in any large-scale face recognition dataset.

Another important way to boost the accuracy of a face recognition system is to choose a deep learning model architecture specifically designed for large-scale face datasets. Most experiments in the academic community use standard ResNet-like architectures. The recent accuracy gains achieved on ImageNet with neural architecture search (NAS) suggest that automatically optimized architectures could also improve representation learning models in terms of both accuracy and speed.

The other crucial topic that needs to be covered in detail is the database of faces on which the models are trained. Two major problems are associated with large-scale face datasets:

1. Label noise
2. Class imbalances

Generally, there are two types of label noise. The first is open-set noise, where the true identity of a face lies outside the training label set but the face is incorrectly given a label from within it. The second is closed-set label noise, which occurs when a face belongs to one of the identities in the dataset but is labeled as a different one. Closed-set noise is generally considered more harmful than open-set noise [9]. Semi-supervised training techniques can be used to partially filter out both types of noise. SoftTriple and sub-center ArcFace are designed to reduce the effect of noise by using a multi-center softmax loss, which defines several sub-centers for each identity, thus helping to capture the more complex geometry of the original data and to identify potentially mislabeled samples [8,10].
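The multi-center idea can be sketched as follows: each identity owns several sub-centers, and a sample is compared with the closest one. This simplified version follows the max-pooling step used in sub-center ArcFace and omits the angular margin and scaling of the full loss; the function name, array shapes, and toy values are assumptions made for illustration.

```python
import numpy as np


def subcenter_logits(embeddings, sub_centers):
    """Per-class cosine logits using the closest sub-center of each class.

    embeddings:  (B, D) array of face embeddings.
    sub_centers: (C, K, D) array, K sub-centers for each of C identities.
    Returns (logits, chosen): logits is (B, C); chosen is (B, C) and holds
    the index of the sub-center that produced each logit.
    """
    emb = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    cen = sub_centers / np.linalg.norm(sub_centers, axis=2, keepdims=True)
    # Cosine similarity of every sample to every sub-center: (B, C, K).
    sims = np.einsum("bd,ckd->bck", emb, cen)
    return sims.max(axis=2), sims.argmax(axis=2)


# Toy setup: 2 identities, 2 sub-centers each, 2-D embeddings.
centers = np.array(
    [[[1.0, 0.0], [0.0, 1.0]],    # identity 0
     [[-1.0, 0.0], [0.0, 1.0]]]   # identity 1
)
samples = np.array([[2.0, 0.0]])
logits, chosen = subcenter_logits(samples, centers)
print(logits)  # [[1. 0.]]
print(chosen)  # [[0 1]]
```

Tracking which sub-center each training sample falls into is what makes it possible to flag samples that gather around a minor sub-center as potentially mislabeled.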

Class imbalance occurs when one or more classes are disproportionately represented in the dataset. Racial and gender imbalances are the ones most discussed and researched recently. They make it challenging to provide transparent explanations and solutions for face recognition applications. Hence, to cope with real-world diversity, it is crucial to understand this bias thoroughly in every aspect [11].

Most large-scale face recognition datasets cover four major racial groups, namely African, Asian, Caucasian, and Indian. One would expect face recognition performance to be roughly the same across these groups, but this is not always the case for many face recognition vendors. Many studies suggest that some groups are inherently more difficult to recognize even when the model is trained on race-balanced data. One possible reason is that faces with darker skin are more difficult to extract and preprocess for feature embedding generation, especially in dark environments. Group imbalances in the training data therefore widen the accuracy gap between groups even further.
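A first practical step towards detecting such gaps is to report accuracy per group rather than as a single aggregate figure. The sketch below assumes each evaluation record carries a group label and a correctness flag; the group names and outcomes are made-up toy data, not measurements.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Per-group accuracy from an iterable of (group, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


# Made-up verification outcomes for two anonymous groups.
toy_records = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False),
]
per_group = accuracy_by_group(toy_records)
print(per_group)  # {'group_a': 0.75, 'group_b': 0.5}
print(max(per_group.values()) - min(per_group.values()))  # 0.25
```

In this toy data the aggregate accuracy is 4 out of 6, which hides the fact that one group is served noticeably worse than the other.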

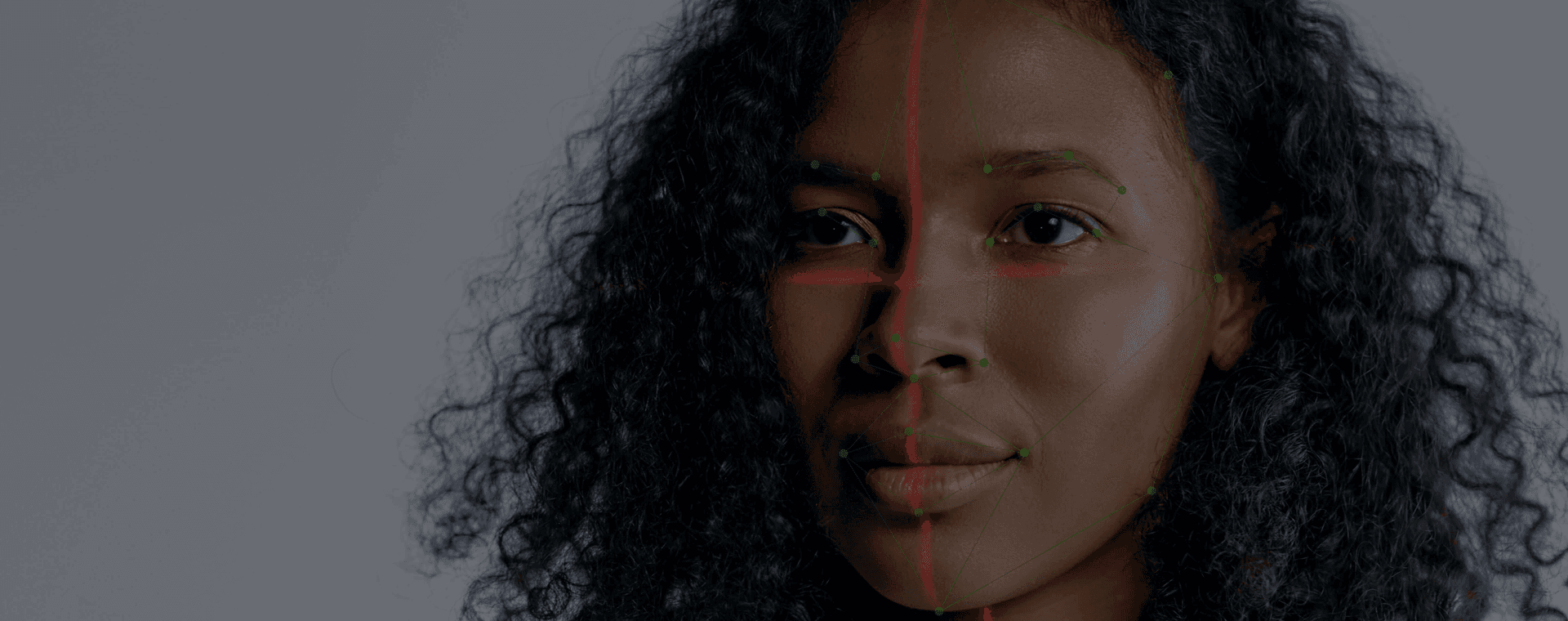

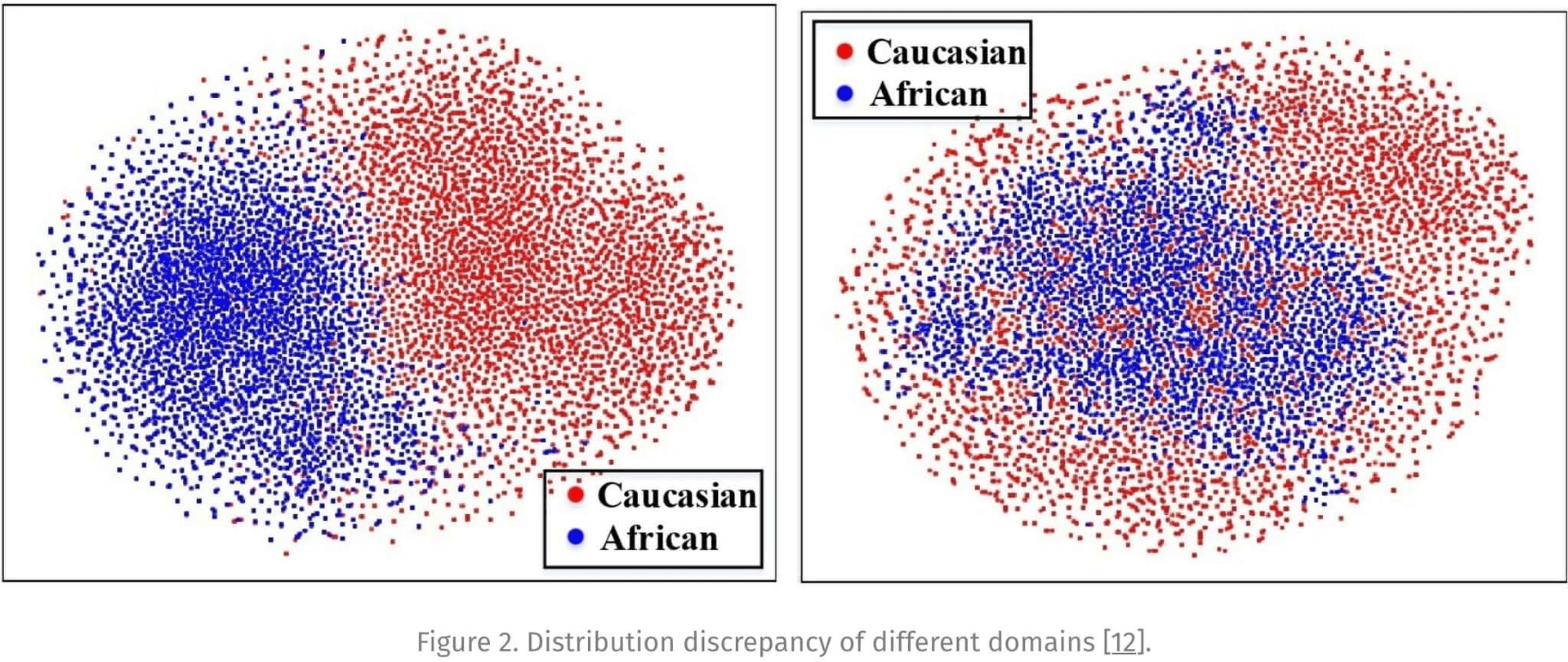

Several semi-supervised approaches have been proposed in the academic community to mitigate the performance gaps described above. They include adversarial data augmentation to balance the data across races, as well as unsupervised alignment of the global distributions of faces to reduce the race gap at the domain level [12]. The goal of these approaches is to learn domain-invariant representations without losing the domain-specific information the model needs. This results in face representations that do not cluster heavily into racial groups (Figure 2). In RL-RBN (reinforcement learning-based race balance network), the authors set a mixed margin for the large-proportion races to achieve balanced performance across races [7,13].

Gender bias is another topic associated with the class imbalance problem. Several studies show that female faces are recognized with lower accuracy even when models are trained on balanced datasets. The reasons for this gap include more varied facial expressions, head pose variations, forehead occlusion, and facial makeup. One obvious way to improve accuracy and reduce gender bias is to enrich existing face datasets with female identities, using semi-automated strategies built around gender classification models. Adversarial data augmentation and domain discrepancy minimization, as used for mitigating racial bias, might also help boost the accuracy of face representation models.

Deep learning-based face recognition systems have achieved remarkable success in recent years. However, some open issues still need to be solved to further improve the speed and accuracy of applications. For face detection models, the speed-accuracy trade-off is an important topic when it comes to scaling the system without losing current precision. Face alignment is another stage that could further improve the quality of recognition. The most important stage remains face representation. Designing new hybrid training methods and using neural architecture search to optimize models for large-scale face datasets are directions worth exploring. Training methods that minimize the negative effects of label noise would increase accuracy and make recognition more robust in extreme conditions such as low image quality, varying facial poses, and low illumination. Balancing datasets across races and genders, together with training procedures that help models learn generic facial representations more efficiently, needs further research to improve the accuracy and robustness of the end-to-end system.

1. “Sample and Computation Redistribution for Efficient Face Detection.” 10 May. 2021.
2. “FaceNet: A Unified Embedding for Face Recognition and Clustering.” 12 Mar. 2015.
3. “Metric Learning with Adaptive Density Discrimination.” 18 Nov. 2015.
4. “Sampling Matters in Deep Embedding Learning.” 23 Jun. 2017.
5. “Deep Metric Learning via Lifted Structured Feature Embedding.” 19 Nov. 2015.
6. “Improved Deep Metric Learning with Multi-class N-pair Loss Objective.”
7. “The Elements of End-to-end Deep Face Recognition: A Survey of ….” 28 Sep. 2020.
8. “Sub-center ArcFace: Boosting Face Recognition by Large … - iBUG.”
9. “The Devil of Face Recognition is in the Noise.” 31 Jul. 2018.
10. “Marginal Loss for Deep Face Recognition | IEEE Conference ….”
11. “Trillionpairs”
12. “Racial Faces in-the-Wild: Reducing Racial Bias by Information ….” 1 Dec. 2018.
13. “Mitigating Bias in Face Recognition Using … - CVF Open Access.”
